How to Secure Your Docker Applications with Nginx and HTTPS

Transform your Docker application into a production-ready service with a custom domain, HTTPS, and automated TLS certificate renewal - all using free, industry-standard tools.

Introduction

This article builds on my previous guide about deploying a Docker web server on a VPS. While functional, the current setup exposes the server directly on port 80 without TLS encryption. We'll address these limitations by adding Nginx as a reverse proxy and securing traffic with TLS using Let's Encrypt certificates.

Prerequisites:

A Docker web server running on port 80

A domain or subdomain with DNS management access

Understanding Nginx and deployment options

Overview

Nginx is a mature web server that functions both as a reverse proxy and static file server, amongst other things. As a reverse proxy, it provides an additional security layer by concealing backend implementation details and offers features like rate limiting, TLS termination, request routing, and much more.

Deployment Approaches

When setting up Nginx as a reverse proxy, you'll need to choose between installing it directly on your host system or running it as a containerized service. Each approach has distinct implications for how Nginx interacts with your backend services, manages configurations, and handles TLS certificates. The choice largely depends on your existing infrastructure and personal preferences.

Host installation

Running Nginx directly on the host offers several advantages:

Ability to proxy to any service, containerized or not

Direct access to host filesystem for static content

Simpler initial setup and configuration

Key limitations:

Management and monitoring separate from Docker ecosystem (via systemd)

Docker services must expose host ports for Nginx connectivity

Scaling up a Docker service using replicas leads to port conflicts, since two containers cannot listen on the same host port. To work around this, each replica must listen on a different host port, and Nginx must be configured with every one of those ports so that it can balance the load across them.
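As a rough sketch of that workaround, the snippet below prints an Nginx upstream block listing one backend per replica. The helper name render_upstream, the upstream name app_backend, and the host ports 8081 and 8082 are all assumptions made for illustration; they do not come from the setup in this article.

```shell
#!/bin/sh
# render_upstream is a hypothetical helper: it prints an Nginx "upstream"
# block with one "server" line per host port passed as an argument.
render_upstream() {
    name="$1"
    shift
    echo "upstream $name {"
    for port in "$@"; do
        echo "    server 127.0.0.1:$port;"
    done
    echo "}"
}

# Two replicas published on host ports 8081 and 8082 (assumed values).
render_upstream app_backend 8081 8082
```

A location block could then point at the group with proxy_pass http://app_backend; and Nginx would distribute requests across the listed servers, round-robin by default.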

Docker installation

Deploying Nginx in a container provides:

Native Docker network integration

Automatic DNS resolution between containers

Can make use of Docker’s built-in load balancing for scaled services. Services are scaled up and down without having to modify the Nginx configuration. (That being said, Nginx load balancing is more feature-rich than Docker’s.)

However, this approach requires:

Volume management for configuration and TLS certificates

More complex initial configuration

Additional networking setup to proxy traffic to non-containerized services running on the host

This article will focus on the first method, host installation, as it provides greater flexibility for proxying both containerized and traditional services, and is the simpler of the two.

Initial setup

Before installing Nginx, we need to prepare the host system. Since Nginx will handle incoming traffic on port 80, we'll need to stop any existing services using that port. In our case, that's the Docker container from the previous article:

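$ docker stop app

Next, we'll configure the firewall to allow HTTP and HTTPS traffic. Ubuntu's UFW (Uncomplicated Firewall) provides a straightforward way to manage these rules:

$ sudo ufw status

Expected output:

Status: active

To                         Action      From
--                         ------      ----
Nginx Full                 ALLOW       Anywhere
Nginx Full (v6)            ALLOW       Anywhere (v6)

If you don't see these Nginx rules, add them:

$ sudo ufw allow 'Nginx Full'

This single rule enables both HTTP (port 80) and HTTPS (port 443) traffic. The 'Nginx Full' application profile is registered by the Nginx package, so if UFW reports that it cannot find the profile, run this command again after the installation step below. If your firewall isn't active yet, enable it (if you connect over SSH, allow it first with sudo ufw allow OpenSSH so you don't lock yourself out):

$ sudo ufw enable

Installing Nginx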

On Debian systems, install Nginx using apt:

$ sudo apt update

$ sudo apt install nginx

For other distributions, refer to the official installation instructions.

After installation, Nginx starts automatically. Verify the service status:

$ systemctl status nginx

Expected output:

● nginx.service - A high performance web server and reverse proxy server

...

Active: active (running) since Sun 2024-10-13 16:26:23 UTC; ...To verify the installation, we'll access Nginx's default landing page. First, find your VPS's public IP address:

$ curl ip.nowExample output:

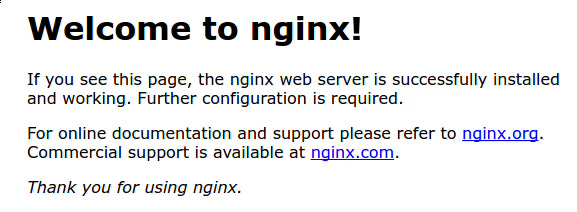

12.34.56.78Visit this IP address in your browser. You should see the default Nginx welcome page, confirming that both the installation and port 80 access are working correctly.

Understanding Nginx configuration

Default configuration

Let's examine Nginx's default configuration at /etc/nginx/sites-available/default to understand how Nginx handles requests:

$ cat /etc/nginx/sites-available/default

The key components are:

listen 80 default_server;
listen [::]:80 default_server;

This configures Nginx to accept both IPv4 and IPv6 connections on port 80. The default_server parameter makes this block handle any requests that don't match another server block.

root /var/www/html;

root defines the base directory for static file serving. We’ll explore this soon.

index index.html index.htm index.nginx-debian.html;

index defines the file lookup order when a request maps to a directory.

server_name _;

The underscore (_) is not a special wildcard. It is simply a name that never matches a real Host header. Combined with default_server above, it makes this block the fallback for every request that no other server block claims. Currently, there are no other server blocks, so this will catch all requests.

location / {
    try_files $uri $uri/ =404;
}

This location block implements simple request handling logic:

Attempt to serve the exact URI

Try serving it as a directory

Return 404 if nothing matches
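To make those three steps concrete, here is a simplified shell simulation of the lookup order. It is only a sketch: resolve_uri is a hypothetical helper rather than anything Nginx provides, it ignores the index directive, and it uses a throwaway temporary directory in place of the real document root.

```shell
#!/bin/sh
# Simplified simulation of "try_files $uri $uri/ =404;".
# resolve_uri is a hypothetical helper, not an Nginx command.
resolve_uri() {
    root="$1"
    uri="$2"
    if [ -f "$root$uri" ]; then
        echo "file"        # $uri matched a regular file
    elif [ -d "$root$uri" ]; then
        echo "directory"   # $uri/ matched; Nginx would then consult "index"
    else
        echo "404"         # nothing matched
    fi
}

# Throwaway document root so the sketch does not touch /var/www/html.
demo_root="$(mktemp -d)"
mkdir "$demo_root/docs"
echo "hello" > "$demo_root/index.html"

resolve_uri "$demo_root" /index.html   # prints: file
resolve_uri "$demo_root" /docs         # prints: directory
resolve_uri "$demo_root" /missing      # prints: 404
rm -r "$demo_root"
```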

To find the default landing page, take a look at the directory that root is pointing to:

$ ls /var/www/html
index.nginx-debian.html

While we'll be configuring Nginx as a reverse proxy rather than a static file server, understanding this default configuration provides context for our next steps.

Configuring Nginx as a reverse proxy

While we could modify the default configuration to set up our reverse proxy, it's better to create a separate configuration file for each domain. This approach makes it easier to manage multiple services and domains on a single VPS.

We'll create a new configuration file named after our domain. Using the domain name as the filename is a common convention that makes it clear which service the configuration belongs to:

$ sudo vim /etc/nginx/sites-available/api.kkyri.com

You should replace api.kkyri.com with your own domain.

Add this configuration:

server {
    listen 80;
    listen [::]:80;

    server_name api.kkyri.com;

    location / {
        proxy_pass http://localhost:8080;
        include proxy_params;
    }
}

Let's break down what makes this configuration different from the default:

server_name api.kkyri.com tells Nginx to apply this configuration only for requests to this specific domain (it looks at the request’s Host header to determine this)

proxy_pass forwards requests to our application instead of serving static files

include proxy_params adds standard proxy headers like X-Real-IP and X-Forwarded-For, which help our application understand the origin of the request

This domain-based configuration approach is particularly powerful - you can host multiple services on your VPS by creating additional server blocks with different domain names, each proxying to their respective services. For example, I could add another configuration for docs.kkyri.com, which points to a different underlying service.
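One way to picture hosting several services is a small template that emits one server block per domain. The sketch below is an illustration only: render_server_block is a hypothetical helper, and port 8081 for docs.kkyri.com is an assumed value, not something configured elsewhere in this article.

```shell
#!/bin/sh
# render_server_block is a hypothetical helper that prints a reverse-proxy
# server block for one domain and one local backend port.
render_server_block() {
    domain="$1"
    port="$2"
    cat <<EOF
server {
    listen 80;
    listen [::]:80;

    server_name $domain;

    location / {
        proxy_pass http://localhost:$port;
        include proxy_params;
    }
}
EOF
}

render_server_block api.kkyri.com 8080
render_server_block docs.kkyri.com 8081   # port 8081 is an assumed example
```

In practice each block would live in its own file under /etc/nginx/sites-available/, named after its domain, so the script's output is best read as a preview of what those files would contain.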

Enable the configuration by creating a symbolic link in sites-enabled:

$ sudo ln -s /etc/nginx/sites-available/api.kkyri.com /etc/nginx/sites-enabled/

Validate the configuration:

$ sudo nginx -t

Before reloading Nginx, we'll need to ensure our application is running on port 8080. Let's handle that in the next section.

Setting up the backend service

Now we need to reconfigure our Docker service to run on port 8080 instead of 80, matching our Nginx configuration.

Update the port mapping in your compose.yaml:

services:
  app:
    image: app:latest
    container_name: app
    ports:
      - "8080:8080" # Changed from 80:8080
    ...

The port mapping format is HOST_PORT:CONTAINER_PORT. We're changing only the host port since that's what Nginx will connect to. The container's internal port remains the same.
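The short port syntax can also carry an optional bind address in front, as in IP:HOST_PORT:CONTAINER_PORT. The sketch below splits a mapping string into its parts to show how the fields line up. describe_port_mapping is a hypothetical helper, and it deliberately ignores IPv6 addresses and protocol suffixes such as /udp.

```shell
#!/bin/sh
# describe_port_mapping is a hypothetical helper that splits a Compose
# short-syntax port mapping into bind address, host port and container port.
describe_port_mapping() {
    mapping="$1"
    container="${mapping##*:}"   # text after the last colon
    rest="${mapping%:*}"         # everything before the last colon
    case "$rest" in
        *:*) bind="${rest%%:*}"; host="${rest##*:}" ;;   # IP:HOST form
        *)   bind="all interfaces"; host="$rest" ;;      # HOST form
    esac
    echo "bind=$bind host=$host container=$container"
}

describe_port_mapping "8080:8080"
# prints: bind=all interfaces host=8080 container=8080
describe_port_mapping "127.0.0.1:8080:8080"
# prints: bind=127.0.0.1 host=8080 container=8080
```

Since Nginx reaches the application through localhost, a mapping such as "127.0.0.1:8080:8080" may be worth considering: Docker manages its own firewall rules for published ports, so binding to the loopback address is one way to keep port 8080 from being reachable directly from outside the VPS.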

Deploy the changes:

$ docker compose up -d

Finally, apply the new Nginx configuration:

$ sudo systemctl reload nginx

At this point, Nginx should be forwarding requests from port 80 to your application running on port 8080. The next step is configuring DNS to route traffic to your VPS.

Configuring DNS records

While Nginx is now set up to proxy requests, we need to configure DNS to route domain traffic to our VPS. Currently, accessing your VPS's IP address directly still shows the default Nginx page, because the request's Host header contains the IP address rather than the domain name we configured, so it falls through to the default server block.

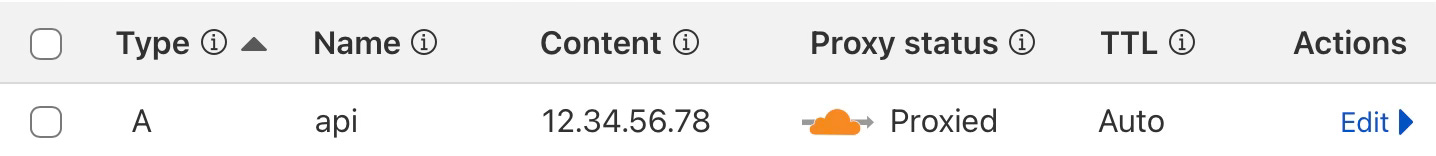
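If you'd like to confirm the proxy works before DNS is in place, one option is to send the Host header by hand from the VPS itself. The sketch below wraps that in a function. probe_vhost is a hypothetical helper name, and the example assumes curl is installed and Nginx is listening on localhost port 80.

```shell
#!/bin/sh
# probe_vhost is a hypothetical helper: it requests "/" from a server while
# overriding the Host header, and prints only the HTTP status code.
probe_vhost() {
    host_header="$1"
    target="${2:-http://localhost}"
    curl -s -o /dev/null -w '%{http_code}\n' -H "Host: $host_header" "$target/"
}

# Usage on the VPS (commented out so the sketch has no side effects):
# probe_vhost api.kkyri.com
```

A 200 response here, alongside the default page when the header is omitted, would suggest that the server block and the backend are wired up correctly and that only DNS remains.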

In your domain registrar's DNS settings, you'll need to add one of the following:

An A record if your VPS has a static IP:

Type: A
Name: api.kkyri.com
Value: 12.34.56.78

A CNAME record if your VPS provider gives you a domain name:

Type: CNAME
Name: api.kkyri.com
Value: kkyri-api.provider.com

Most VPS providers assign static IPs, making the A record the more common choice. However, if you're using a platform that provides a domain name by default instead, use a CNAME record.

After configuring DNS, changes can take anywhere from a few seconds to several hours to propagate through the DNS network. Once propagation is complete, visiting your domain should show your application's response:

Hello, World!

Debugging connection issues

If your application isn't accessible through your domain, there are several layers to check. Let's go through them systematically.

DNS resolution

First, verify your DNS configuration using the dig command:

# For A records
$ dig api.kkyri.com A
;; ANSWER SECTION:
api.kkyri.com 60 IN A 12.34.56.78

# For CNAME records
$ dig api.kkyri.com CNAME
;; ANSWER SECTION:
api.kkyri.com 60 IN CNAME kkyri-api.provider.com

Look for the ANSWER sections and verify they’re pointing to the expected IP address or domain.
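For a scripted version of this check, dig's +short flag prints only the answer values, which makes them easy to compare. The sketch below is a minimal example under that assumption. resolves_to is a hypothetical helper, and the demonstration feeds it canned output rather than performing a live lookup.

```shell
#!/bin/sh
# resolves_to is a hypothetical helper: it reads "dig +short" output on stdin
# and succeeds if any line equals the expected value exactly.
resolves_to() {
    grep -Fxq -- "$1"
}

# Offline demonstration with canned output instead of a live lookup.
if printf '12.34.56.78\n' | resolves_to 12.34.56.78; then
    echo "DNS points at the expected address"
fi

# On a real system:
# dig +short api.kkyri.com A | resolves_to 12.34.56.78
```

Note that for CNAME lookups, dig +short prints the target with a trailing dot, so the expected value would need to include it.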

Nginx logs

Nginx maintains two primary log files that are useful for debugging:

Access logs show incoming requests. Each request should result in a log line:

$ sudo tail -f /var/log/nginx/access.log
192.168.1.1 - - [27/Oct/2024:21:15:23 +0000] "GET / HTTP/1.1" 200 ...

Error logs show configuration issues or other errors:

$ sudo cat /var/log/nginx/error.log

Application logs

If DNS resolves correctly and Nginx logs show incoming requests, check your application:

Verify the container is running:

$ docker ps
CONTAINER ID IMAGE STATUS PORTS NAMES
9e28276170ab app Up 30 minutes 0.0.0.0:8080->8080/tcp app-1

Check container logs, using the name shown in the NAMES column:

$ docker logs app-1
Server is listening on :8080...

If your application doesn't already log incoming requests, consider adding that to assist with debugging. Remember to rebuild and restart your container after any code changes.

This layered approach helps isolate whether the issue is with DNS resolution, Nginx configuration, or the application itself.

Enabling HTTPS with Certbot

Prerequisites

Before proceeding, ensure:

Your domain is correctly pointing to your VPS (verify with dig and/or your browser)

Nginx is properly configured and serving requests

Ports 80 and 443 are open in your firewall

Installing Certbot

To serve traffic over HTTPS, we need TLS certificates. Let's Encrypt provides these certificates for free, and we'll use Certbot to automate their management. We'll also use Certbot's Nginx plugin to automatically configure TLS in our Nginx server.

Install Certbot and the Nginx plugin:

$ sudo apt install certbot python3-certbot-nginx

Generate a certificate for your domain:

$ sudo certbot --nginx -d api.kkyri.com

The domain you pass to Certbot must be the same domain you configured in your Nginx server block.

This command does several things:

Verifies your domain ownership

Generates TLS certificates

Updates your Nginx configuration to use these certificates

Sets up automatic HTTP to HTTPS redirection

Certificates are valid for 90 days. Certbot sets up automatic renewal via a systemd timer:

$ sudo systemctl status certbot.timer

● certbot.timer - Run certbot twice daily

Loaded: loaded (/lib/systemd/system/certbot.timer; enabled; vendor preset: enabled)

Active: active (waiting) since Sat 2024-10-12 10:49:21 UTC; 2min 52s ago

Trigger: Sat 2024-10-12 16:19:52 UTC; 5h 27min left

Triggers: ● certbot.service

Oct 12 10:49:21 vps systemd[1]: Started Run certbot twice daily.

(Optional) To test the renewal process:

$ sudo certbot renew --dry-run

This will simulate a renewal for your domain.
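To keep an eye on the 90-day window yourself, you could compute how many days a certificate has left. The sketch below shows only the arithmetic, using made-up timestamps. days_left is a hypothetical helper, not part of Certbot.

```shell
#!/bin/sh
# days_left is a hypothetical helper: whole days between two Unix timestamps.
# $1: expiry time in epoch seconds, $2: current time in epoch seconds.
days_left() {
    echo $(( ($1 - $2) / 86400 ))
}

# Offline demonstration: a certificate issued "now" that expires 90 days later.
now=1700000000
expiry=$(( now + 90 * 86400 ))
days_left "$expiry" "$now"   # prints: 90
```

On a real system, the expiry timestamp could come from the certificate itself, for example by reading the notAfter date with openssl x509 -enddate -noout and converting it with date -d. That assumes GNU date and Certbot's usual /etc/letsencrypt/live/ directory layout, so adjust to your environment.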

To understand the changes that Certbot made to your Nginx configuration, open it:

$ cat /etc/nginx/sites-available/api.kkyri.com

Certbot has added:

Certificate paths

TLS configuration

HTTP to HTTPS redirect

Port 443 listener
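Certbot's Nginx plugin tags the lines it writes with a "# managed by Certbot" comment, which makes its changes easy to pick out. The sketch below filters a file for that marker. list_certbot_lines is a hypothetical helper, and the sample file contents are illustrative rather than a copy of what Certbot generates.

```shell
#!/bin/sh
# list_certbot_lines is a hypothetical helper: it prints, with line numbers,
# every line of a config file that carries the "managed by Certbot" marker.
list_certbot_lines() {
    grep -n 'managed by Certbot' "$1"
}

# Offline demonstration on a small sample file (contents are illustrative).
sample="$(mktemp)"
cat > "$sample" <<'EOF'
server {
    server_name api.kkyri.com;
    listen 443 ssl; # managed by Certbot
}
EOF
list_certbot_lines "$sample"   # prints: 3:    listen 443 ssl; # managed by Certbot
rm "$sample"
```

Pointing the same function at /etc/nginx/sites-available/api.kkyri.com should list each directive from the categories above.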

Note that Nginx still communicates with your backend service over plain HTTP. This is acceptable here because that traffic travels over the loopback interface and never leaves the VPS, and it simplifies your application architecture by handling TLS termination at the Nginx level.

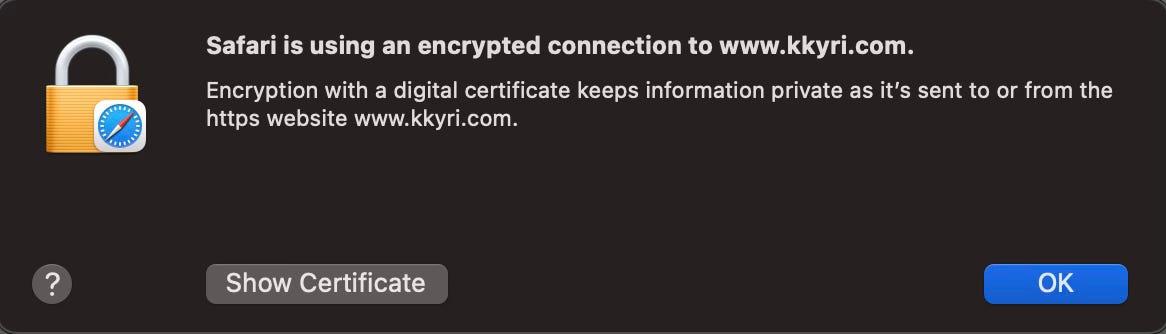

Your site should now be accessible via HTTPS, with browser security indicators confirming the valid certificate.

Summary

Well done! The setup is now complete. You have:

A reverse proxy handling incoming traffic

Automatic HTTPS encryption with Let's Encrypt certificates, and automatic renewal

Your domain properly configured and routing to your VPS

Routing completely decoupled from your service

The infrastructure you’ve configured thus far is secure and maintainable while remaining simple. From here, you could explore features like rate limiting, monitoring, or hosting additional services on the same VPS.

The best part? You did it all yourself. Until next time!
